Can AI Tell What’s Funny? Teaching Humour to Machines

On the school run, my kids were reading jokes from a book—deciphering the wordplay, spotting the misdirection, and savouring the pure ridiculousness. It got me thinking: how do we learn humour, and how do machines even begin to understand it?

What Makes a Joke Tick?

- Wordplay swaps sound‑alikes into punchlines.

Q: What’s the best time to visit the dentist?

A: 2:30 (tooth ’urty)

- Absurdity leans on the ridiculous.

Q: Why do elephants paint their toenails red?

A: So they can hide in cherry trees.

Learning a language is hard enough; adding our peculiar humour makes it harder. Jokes break rules on purpose, whereas language models are trained to follow the patterns they have seen.

How Language Models Learn (and Why Jokes Are Tricky)

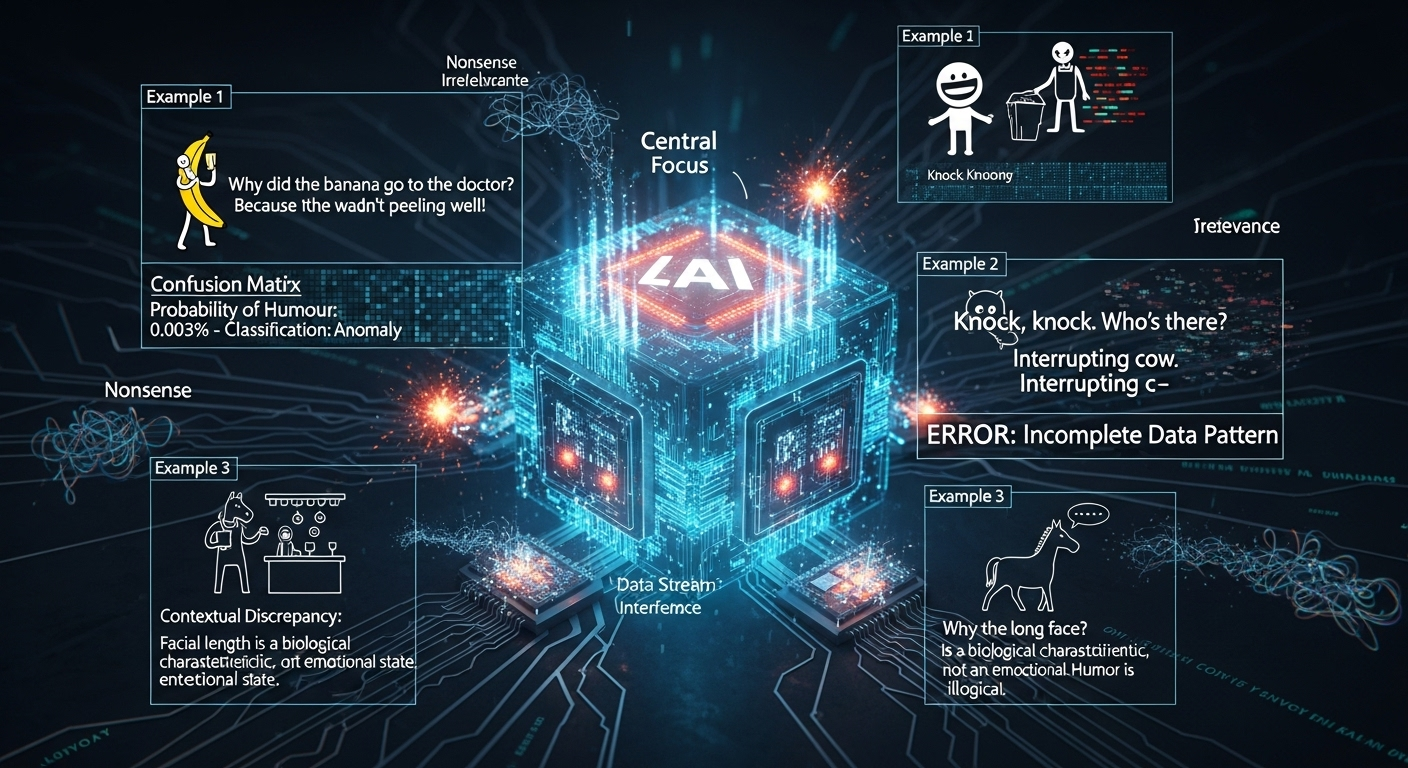

Language models learn by tracking associations between words—predicting what comes next over billions of examples. They capture patterns in grammar, facts, and relationships. But the ‘rules’ that make a joke funny often violate those patterns while still making sense. A set‑up primes a pattern; a punchline flips it. Will a model try to “correct” a joke as bad text—or recognise it as humorous?
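To make the "predicting what comes next" idea concrete, here is a toy sketch in Python. It counts which word follows which in a tiny made-up corpus, then measures how surprising a given next word is. The corpus, the function names, and the add-one smoothing are all my own assumptions for illustration; real language models are neural networks trained on vastly more text, not word-pair counters.

```python
import math
from collections import Counter, defaultdict

# Tiny invented corpus (an assumption for this sketch, not real training data).
CORPUS = [
    "the best time to visit the dentist is in the morning",
    "the best time to visit the dentist is after lunch",
    "the best time to visit the park is in the morning",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def surprisal(counts, prev, nxt, vocab_size, alpha=1.0):
    """Surprise, in bits, of seeing `nxt` after `prev` (add-alpha smoothing)."""
    total = sum(counts[prev].values())
    p = (counts[prev][nxt] + alpha) / (total + alpha * vocab_size)
    return -math.log2(p)

counts = train_bigrams(CORPUS)
# One extra vocabulary slot stands in for any word the corpus never saw.
vocab_size = len({w for s in CORPUS for w in s.split()}) + 1

expected = surprisal(counts, "is", "in", vocab_size)     # a continuation seen before
twist = surprisal(counts, "is", "tooth", vocab_size)     # a punchline-style surprise

print(f"'is in':    {expected:.2f} bits")
print(f"'is tooth': {twist:.2f} bits")
```

The unseen continuation scores as more surprising than the familiar one. That is the tension in miniature: to a pattern-matcher, a punchline can look a lot like a mistake.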

Search engines used to rely on metadata: “this page contains jokes.” In a novel there’s no tag for “this bit is funny.” Shakespearean comedy has commentary to lean on, but does a model genuinely understand why a line lands? Or is it just good at echoing analysis?

A Quick Test: Candle + Puppet

Prompt: Create a joke about a candle and a puppet.

Perplexity’s AI:

Q: Why did the candle refuse to hang out with the puppet?

A: Because every time things got heated, the puppet just melted under the pressure!

GPT‑4o:

Q: Why did the candle break up with the puppet?

A: Because it got tired of waxing poetic while the puppet just stringed it along!

The second ties both subjects neatly—“waxing” and “string”—and feels closer to the target.

Original or not, it was faster than me. And that’s the real utility: generative AI helps you explore options. A best man’s speech. A lighter line for a presentation. Or just the right words when a thesaurus isn’t cutting it.

It won’t make me a stand‑up. It might make me clearer—which is hardly a bad outcome.

And Here’s the Rub: Trust, but Verify

The more we use generative AI, the more we need to check what it says. A joke is a small, safe sandbox; a paragraph proposing a strategy, a policy, or a technical fix is not. When we ask for arguments and solutions, we still have to read them, slowly, to make sure they match our intent, use sound reasoning, and don’t quietly smuggle in assumptions we wouldn’t sign off on.

With code, the stakes rise again. Validating generated code isn’t just “does it run?” but “does it belong here?” You have to understand how the pieces fit, whether the approach aligns with your architecture, what happens under edge cases, and whether a neat new function quietly breaks a dependency three layers down. It’s less like copy-editing and more like marking homework for an assignment you’ve never seen before: understand the brief, check the logic, test the outputs, and make sure nothing else falls over.
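As a small illustration of "test the outputs", suppose an assistant hands you a helper that splits a list into chunks. The function below, `chunk`, is a hypothetical example I have made up for this sketch, as is the `check_chunk` routine; the point is only that the checks probe the edges rather than just the happy path.

```python
def chunk(items, size):
    """Hypothetical AI-suggested helper: split `items` into lists of at most `size`."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]

def check_chunk():
    """Run a few checks, including edge cases that 'does it run?' would miss."""
    # Happy path: the list divides evenly.
    assert chunk([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]
    # Empty input should give an empty result, not an error.
    assert chunk([], 3) == []
    # A leftover item ends up in a shorter final chunk.
    assert chunk([1, 2, 3], 2) == [[1, 2], [3]]
    # A chunk size larger than the list returns everything in one chunk.
    assert chunk([1], 5) == [[1]]
    # A nonsensical size should be rejected rather than silently accepted.
    try:
        chunk([1, 2], 0)
    except ValueError:
        pass
    else:
        raise AssertionError("size=0 should be rejected")
    return True

check_chunk()
```

Checks like these only cover the function in isolation. Whether it fits your architecture and leaves its callers intact is still a job for integration tests and a human read-through.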

A Practical Way to Use AI (Without Regretting It)

Treat AI like a fast intern with encyclopaedic recall:

- Great for first drafts, options, and reminders of patterns you might have missed.

- Not a substitute for judgement, testing, or accountability.

Keep humans in the loop—editorial sense for prose, unit and integration tests for code, and a habit of asking “does this actually solve the problem?” Used that way, AI becomes a lever, not a liability. And that, funny or not, is something worth using.